ChatGPT does not have a brain, but it tries to produce text that reads as if it had been written by a human. How does ChatGPT do that?

It is perhaps the first question one should ask ChatGPT itself:

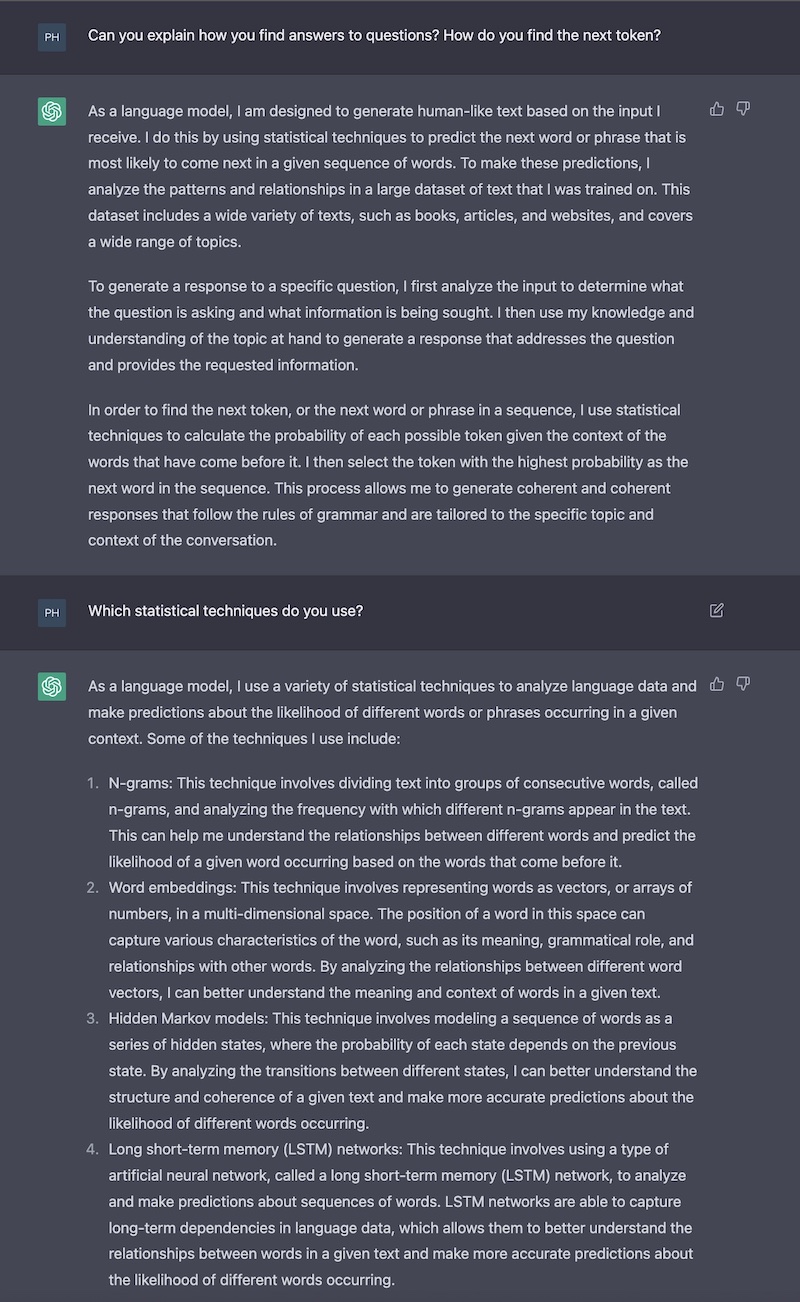

Can you explain how you find answers to questions?

ChatGPT's answer is detailed and precise:

As a language model, I am designed to generate human-like text based on the input I receive. I do this by using statistical techniques to predict the next word or phrase that is most likely to come next in a given sequence of words. To make these predictions, I analyze the patterns and relationships in a large dataset of text that I was trained on. This dataset includes a wide variety of texts, such as books, articles, and websites, and covers a wide range of topics.

To generate a response to a specific question, I first analyze the input to determine what the question is asking and what information is being sought. I then use my knowledge and understanding of the topic at hand to generate a response that addresses the question and provides the requested information.

In order to find the next token, or the next word or phrase in a sequence, I use statistical techniques to calculate the probability of each possible token given the context of the words that have come before it. I then select the token with the highest probability as the next word in the sequence. This process allows me to generate coherent and coherent responses that follow the rules of grammar and are tailored to the specific topic and context of the conversation.
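The selection step described in that last paragraph can be illustrated in a few lines of Python. This is only a minimal sketch under my own assumptions: the candidate words and their scores are invented, `softmax` and `pick_next_token` are hypothetical helper names, and it is not ChatGPT's actual code. A real model scores a vocabulary of many thousands of tokens, and text generators frequently sample from the distribution instead of always taking the most likely token.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - highest) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def pick_next_token(scores):
    """Greedy choice: return the token with the highest probability."""
    probs = softmax(scores)
    return max(probs, key=probs.get)


# Invented scores for the token that might follow "The cat sat on the"
scores = {"mat": 3.2, "roof": 2.1, "moon": 0.3}
next_token = pick_next_token(scores)
print(next_token)  # prints "mat"
```

Repeating this step, each time appending the chosen token to the context, yields a whole sentence one token at a time.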

ChatGPT's answer is still quite general, so I ask for more detail about the techniques used:

Which statistical techniques do you use?

As a language model, I use a variety of statistical techniques to analyze language data and make predictions about the likelihood of different words or phrases occurring in a given context. Some of the techniques I use include:

1. N-grams: This technique involves dividing text into groups of consecutive words, called n-grams, and analyzing the frequency with which different n-grams appear in the text. This can help me understand the relationships between different words and predict the likelihood of a given word occurring based on the words that come before it.

2. Word embeddings: This technique involves representing words as vectors, or arrays of numbers, in a multi-dimensional space. The position of a word in this space can capture various characteristics of the word, such as its meaning, grammatical role, and relationships with other words. By analyzing the relationships between different word vectors, I can better understand the meaning and context of words in a given text.

3. Hidden Markov models: This technique involves modeling a sequence of words as a series of hidden states, where the probability of each state depends on the previous state. By analyzing the transitions between different states, I can better understand the structure and coherence of a given text and make more accurate predictions about the likelihood of different words occurring.

4. Long short-term memory (LSTM) networks: This technique involves using a type of artificial neural network, called a long short-term memory (LSTM) network, to analyze and make predictions about sequences of words. LSTM networks are able to capture long-term dependencies in language data, which allows them to better understand the relationships between words in a given text and make more accurate predictions about the likelihood of different words occurring.
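To make the first item in this list more concrete, here is a small bigram (2-gram) model in Python. It is a toy sketch under my own assumptions: the one-sentence corpus is invented, and `train_bigrams` and `next_word_probabilities` are hypothetical names. It illustrates the counting idea named in the answer, and should not be read as a description of how ChatGPT is actually built.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probabilities(counts, prev):
    """Estimate P(next word | previous word) from the bigram counts."""
    followers = counts.get(prev.lower(), Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # the word was never seen with a successor
    return {word: count / total for word, count in followers.items()}


# Invented toy corpus; real models are trained on far larger text collections
corpus = "the cat sat on the mat and the cat slept"
model = train_bigrams(corpus)
probs = next_word_probabilities(model, "the")
print(probs)  # "cat" follows "the" twice, "mat" once
```

In this corpus "the" is followed by "cat" in two of three cases, so a bigram model would predict "cat" as the most likely next word. The limitation is also visible: the prediction depends only on the single preceding word, which is why the other techniques in the list try to capture longer context.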
